
Kartik Sharma

I’m an incoming MSR student at Carnegie Mellon University (Fall ’25), focused on building intelligent systems that learn and reason across modalities. Previously, I was a Senior Engineer at Samsung R&D Bangalore, where I built safety filters for Samsung’s LLMs/LVMs and shipped on-device models to flagship devices. I earned a dual degree in Computer Science and Economics from BITS Pilani, completing research with Prof. R. Venkatesh Babu (long-tail image generation with StyleGANs) and Prof. Poonam Goyal (multimodal crop-yield prediction). I now aim to advance reinforcement learning (RL) and multimodal reasoning for robotics, with an emphasis on safe, transparent, real-world deployment.


Research

My research interests lie broadly at the intersection of vision–language modeling, robotics, and reinforcement learning. I am fascinated by how humans integrate multiple sensory signals (sight, sound, and language) to reason and act in the world, and I aim to replicate this ability in artificial systems. I study multimodal learning and reasoning, focusing on fusing vision, language, and action to enable robust decision-making in dynamic environments. My prior work spans language–vision reasoning, domain adaptation under distribution shift, and robustness in long-tailed settings, using tools such as contrastive learning for cross-lingual grounding, generative models (GANs and diffusion models) for limited-data scenarios, and retrieval-augmented pipelines for LLMs. Going forward, I am particularly interested in incorporating human priors by learning skill representations that transfer between humans and robots. I am also open to new research directions, so please feel free to reach out!

Publications

NoisyTwins: Class-Consistent and Diverse Image Generation through StyleGANs

Harsh Rangwani, Lavish Bansal, Kartik Sharma, Tejan Karmali, Varun Jampani, R. Venkatesh Babu

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2023)

Link to Paper / Project Page / Code

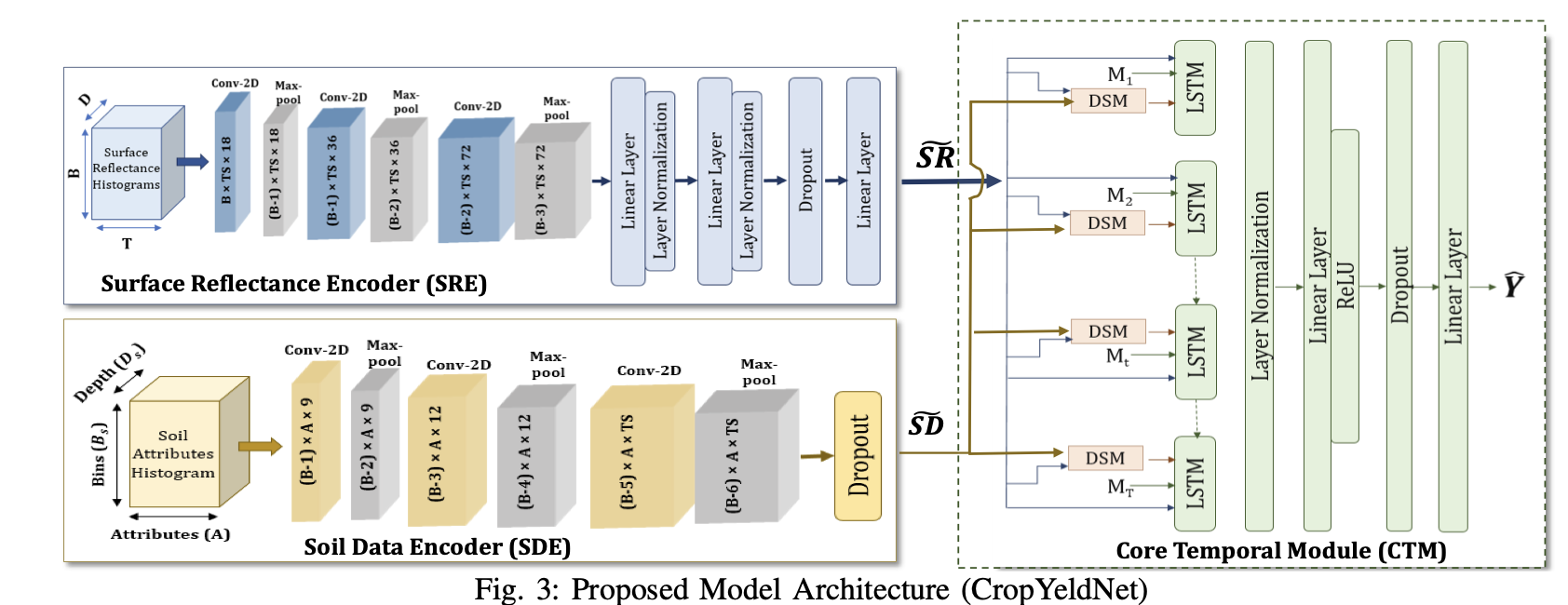

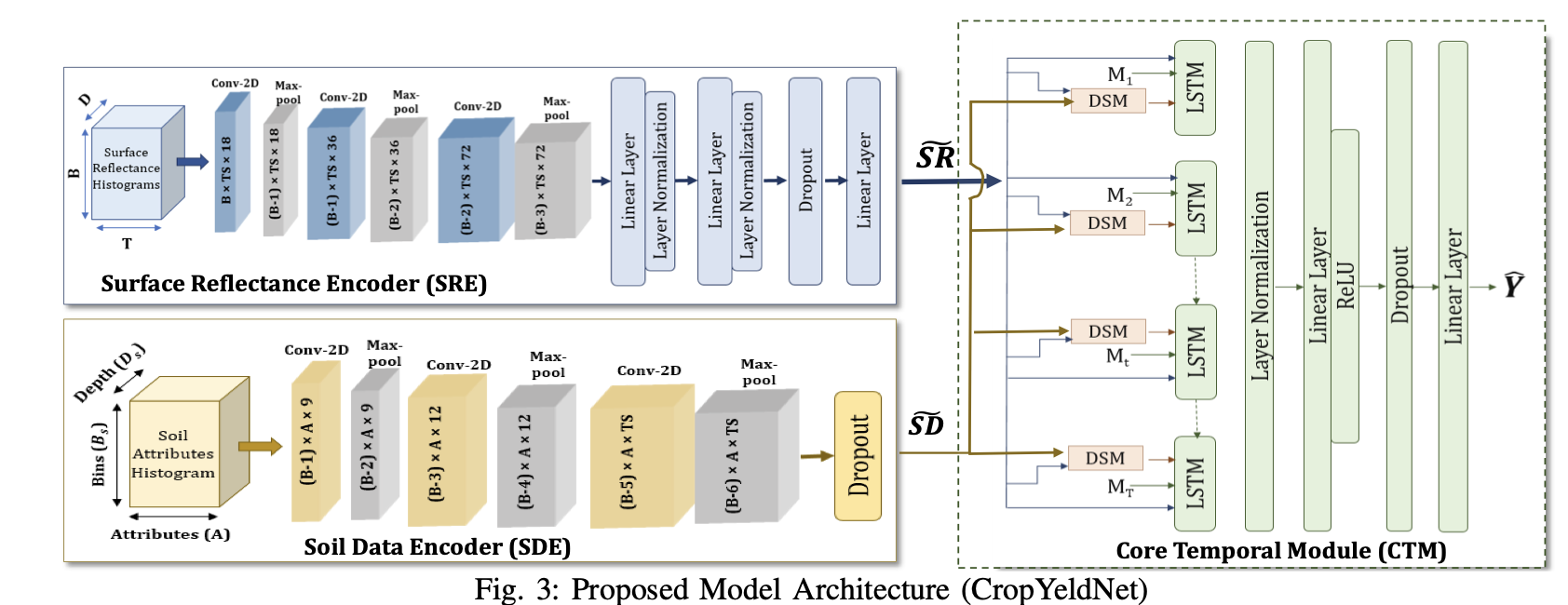

A Generalized Multimodal Deep Learning Model for Early Crop Yield Prediction

Arshveer Kaur, Poonam Goyal, Kartik Sharma, Lakshay Sharma, Navneet Goyal

IEEE International Conference on Big Data (Big Data 2022)

Link to Paper

Experience

Research Engineer — Samsung R&D Institute India (Nov 2023 – May 2025)

Data Scientist — PrivateBlok (Feb 2023 – Nov 2023)

Project Assistant — Video Analytics Lab, IISc Bangalore (Aug 2022 – Jan 2023)

Software R&D Intern — Samsung R&D Institute India (May 2022 – Jul 2022)

Independent Projects

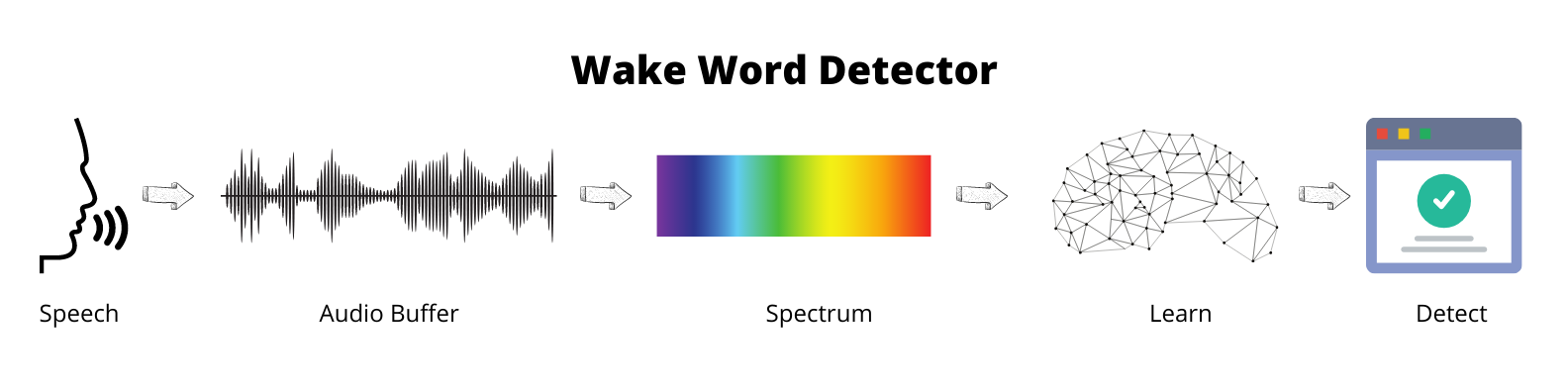

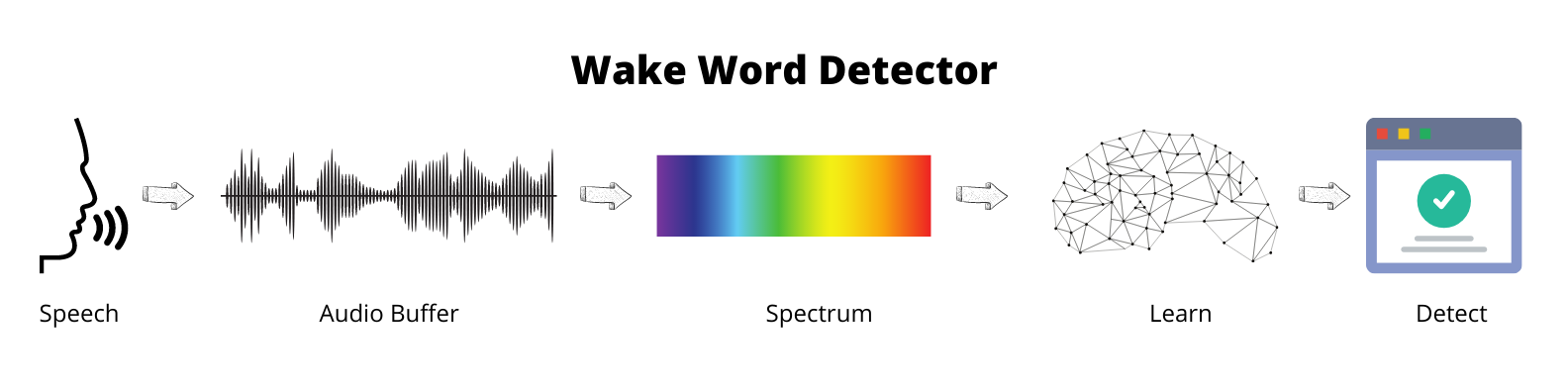

Wake-up Word Detection

Kartik Sharma, Independent Project, July 2021 (project page / code)

Constructed a speech dataset from synthesized audio and implemented a trigger-word detection model that reached over 90% accuracy. The model is a recurrent network built from gated recurrent units (GRUs), trained to detect the moment someone finishes saying the word "activate".
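The description above does not include the data pipeline, so the following is only a minimal sketch of how synthesized training clips are commonly labeled for this task. It assumes a setup like the deeplearning.ai trigger-word exercise: 10-second clips, 1,375 GRU output steps, and a target of 1 for the 50 output steps right after "activate" ends. The helper names `is_overlapping` and `insert_ones` and all of the constants are hypothetical, not taken from this project's code.

```python
# Hypothetical sketch: clip length, output resolution, and window size are assumptions.
CLIP_MS = 10_000   # assumed length of each synthesized training clip (ms)
TY = 1375          # assumed number of GRU output time steps per clip
WINDOW = 50        # assumed number of steps labeled 1 after "activate" ends


def is_overlapping(segment, previous_segments):
    """Return True if (start_ms, end_ms) overlaps any already-placed segment."""
    start, end = segment
    return any(start <= prev_end and end >= prev_start
               for prev_start, prev_end in previous_segments)


def insert_ones(labels, segment_end_ms, clip_ms=CLIP_MS, window=WINDOW):
    """Set the `window` output steps right after the trigger word ends to 1.0."""
    ty = len(labels)
    # Map the end time in milliseconds onto the output time axis.
    end_step = int(segment_end_ms * ty / clip_ms)
    # Stop at the end of the sequence so labels never overflow.
    for t in range(end_step + 1, min(end_step + 1 + window, ty)):
        labels[t] = 1.0
    return labels


if __name__ == "__main__":
    placed = [(824, 1532), (1900, 2305)]
    print(is_overlapping((2305, 2950), placed))  # True: shares the 2305 ms boundary
    y = insert_ones([0.0] * TY, segment_end_ms=5000)
    print(sum(y))  # 50.0
```

Checking for overlap before pasting an "activate" or negative clip onto a background keeps each synthesized example unambiguous, and labeling a short window after the word (rather than a single step) gives the GRU a less sparse target to learn from.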


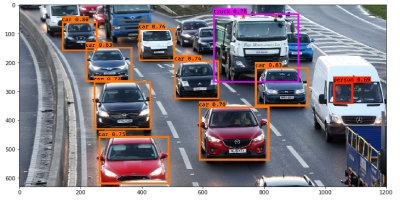

Car Detection with YOLO: You Only Look Once

Kartik Sharma, Independent Project, June 2021 (project page / code)

Implemented real-time object detection on a car dataset using the YOLO model, which was further improved using a U-Net architecture. Non-max suppression based on intersection over union (IoU) was applied to the YOLO grid predictions to keep the most accurate bounding boxes.
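Non-max suppression is the step that turns YOLO's many overlapping grid predictions into one box per object. The sketch below is a minimal, class-agnostic greedy version in plain Python, assuming boxes given as `(x1, y1, x2, y2)` corner coordinates and an IoU threshold of 0.5; the function names and the threshold are illustrative assumptions, not this project's code.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any higher-scoring kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

In a full YOLO pipeline this would typically run after discarding low-confidence boxes and, in many implementations, separately for each class; both of those steps are left out here.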


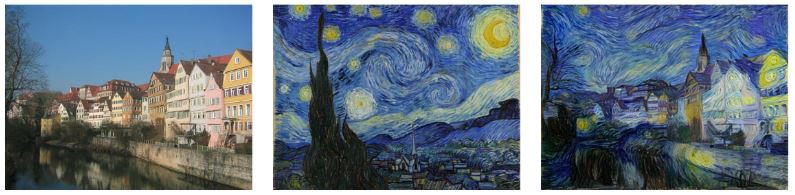

Art Generation with Neural Style Transfer

Kartik Sharma, Independent Project, June 2021 (project page / code)

Used transfer learning with a pretrained VGG-19 network to generate new artistic images. Implemented a cost function that combines a content cost and a style cost, both computed by passing the images through the pretrained VGG-19 model, and minimized it to produce the generated image.
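The cost function described above can be sketched as follows, assuming the Gatys et al. formulation with the normalization constants and weights (alpha = 10, beta = 40) used in the deeplearning.ai version of this exercise. To stay self-contained, each VGG-19 activation map is represented here as a plain nested list of n_C channels, each flattened to n_H * n_W values; the function names are illustrative, and the gradient-descent loop that updates the generated image is not shown.

```python
def gram_matrix(features):
    """Gram matrix G = A A^T for n_C channels of flattened activations."""
    return [[sum(x * y for x, y in zip(fi, fj)) for fj in features] for fi in features]


def content_cost(a_c, a_g):
    """Squared activation difference at one layer, scaled by 1 / (4 n_H n_W n_C)."""
    n_c, n_hw = len(a_c), len(a_c[0])
    sq = sum((x - y) ** 2 for rc, rg in zip(a_c, a_g) for x, y in zip(rc, rg))
    return sq / (4 * n_hw * n_c)


def layer_style_cost(a_s, a_g):
    """Squared Gram-matrix difference, scaled by 1 / (4 n_C^2 (n_H n_W)^2)."""
    n_c, n_hw = len(a_s), len(a_s[0])
    gs, gg = gram_matrix(a_s), gram_matrix(a_g)
    sq = sum((x - y) ** 2 for rs, rg in zip(gs, gg) for x, y in zip(rs, rg))
    return sq / (4 * n_c ** 2 * n_hw ** 2)


def style_cost(style_layers, generated_layers, layer_weights):
    """Weighted sum of per-layer style costs over the chosen VGG-19 layers."""
    return sum(w * layer_style_cost(s, g)
               for s, g, w in zip(style_layers, generated_layers, layer_weights))


def total_cost(j_content, j_style, alpha=10.0, beta=40.0):
    """J(G) = alpha * J_content + beta * J_style (weights are assumptions)."""
    return alpha * j_content + beta * j_style
```

Comparing Gram matrices rather than raw activations is what makes the style term insensitive to where features occur in the image, so it captures texture and color statistics while the content term preserves layout.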


Thank you for visiting my site; if you have any feedback, I would love to hear from you! Feel free to steal this website's source code. Do not scrape the HTML from this page itself, as it includes analytics tags that you do not want on your own website; use the GitHub code instead.